We provide additional resources to the University of Arizona community through our Documentation pages, trainings and workshops, and community forums.

Training Resources

Community Forums

Documentation

"Open Science is the movement to make scientific research (including publications, data, physical samples, and software) and its dissemination accessible to all levels of society, amateur or professional..." (Wikipedia)

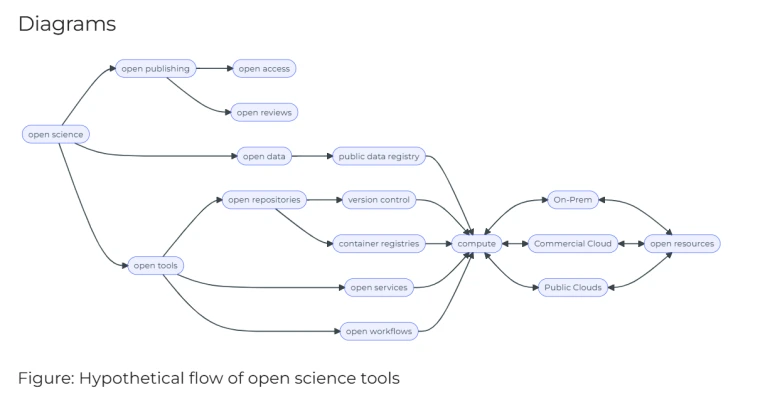

This Awesome List was compiled to help new researchers find and learn about Open Science tools.



UNESCO Recommendation on Open Science

Six Pillars of Open Science

- Open Methodology

- Open Source Software

- Open Data

- Open Access

- Open Peer Review

- Open Educational Resources

Reproducibility and Replicability in Science, by the National Academies (2019)

National Academies Report 2019

Reproducibility means computational reproducibility—obtaining consistent computational results using the same input data, computational steps, methods, code, and conditions of analysis.

Replicability means obtaining consistent results across studies aimed at answering the same scientific question, each of which has obtained its own data.
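The National Academies' definition of computational reproducibility (same input data, code, and conditions of analysis) can be made concrete with a small check. The following is a minimal sketch, not a prescribed workflow. It assumes a hypothetical bootstrap analysis (`run_analysis`) and a hypothetical manifest layout. The sketch fingerprints the input data with SHA-256, records the random seed, and confirms that rerunning the same code on the same data gives a bit-identical result. Bit-identical output is only expected under the same conditions of analysis, meaning the same Python version, libraries, and platform.

```python
import hashlib
import json
import random


def fingerprint(obj) -> str:
    """SHA-256 hex digest of a JSON-serializable object."""
    # sort_keys=True makes the digest independent of dict key order
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_analysis(data, seed, n_boot=200):
    """Hypothetical analysis: the mean plus a rough bootstrap interval.

    All randomness comes from an explicitly seeded generator, so the
    same data, seed, and code produce the same output.
    """
    rng = random.Random(seed)
    n = len(data)
    boot_means = sorted(
        sum(data[rng.randrange(n)] for _ in range(n)) / n for _ in range(n_boot)
    )
    low = boot_means[int(0.025 * n_boot)]
    high = boot_means[int(0.975 * n_boot) - 1]
    return {"mean": sum(data) / n, "boot_interval": [low, high]}


def record_run(data, seed):
    """Hypothetical manifest: what another run needs in order to check the result."""
    result = run_analysis(data, seed)
    return {
        "input_sha256": fingerprint(data),
        "seed": seed,
        "result": result,
        "result_sha256": fingerprint(result),
    }


def is_reproduced(manifest, data):
    """Re-run with the recorded seed and compare fingerprints."""
    if fingerprint(data) != manifest["input_sha256"]:
        return False  # not the same input data, so not a reproduction
    rerun = run_analysis(data, manifest["seed"])
    return fingerprint(rerun) == manifest["result_sha256"]


if __name__ == "__main__":
    data = [2.1, 3.4, 1.9, 4.2, 3.3, 2.8]
    manifest = record_run(data, seed=42)
    print(is_reproduced(manifest, data))
```

Changing either the input data or the seed changes the fingerprints. The check then fails, which is the point: a reproduction must use the same inputs and steps.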

Reproducibility vs. Replicability, by Plesser (2018)

In Reproducibility vs. Replicability, Hans Plesser gives the following useful definitions:

Repeatability (Same team, same experimental setup): The measurement can be obtained with stated precision by the same team using the same measurement procedure, the same measuring system, under the same operating conditions, in the same location on multiple trials. For computational experiments, this means that a researcher can reliably repeat her own computation.

Replicability (Different team, same experimental setup): The measurement can be obtained with stated precision by a different team using the same measurement procedure, the same measuring system, under the same operating conditions, in the same or a different location on multiple trials. For computational experiments, this means that an independent group can obtain the same result using the author’s own artifacts.

Reproducibility (Different team, different experimental setup): The measurement can be obtained with stated precision by a different team, a different measuring system, in a different location on multiple trials. For computational experiments, this means that an independent group can obtain the same result using artifacts which they develop completely independently.
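The phrase "with stated precision" matters in practice, because an independent team's code rarely produces bit-identical floating-point output. The following sketch uses two hypothetical implementations of a mean. The first uses plain left-to-right summation. The second uses Python's exactly rounded `math.fsum` on the sorted values. The two results can differ in the last bit while still agreeing within a stated relative tolerance, which is checked here with `math.isclose`. The tolerance value is an illustrative assumption.

```python
import math


def mean_naive(values):
    """Original team's implementation: left-to-right floating-point summation."""
    total = 0.0
    for v in values:
        total += v
    return total / len(values)


def mean_independent(values):
    """Hypothetical independent reimplementation: exactly rounded sum of sorted values."""
    return math.fsum(sorted(values)) / len(values)


def agrees_within(a, b, rel_tol):
    """Replication check: results are equal within a stated relative precision."""
    return math.isclose(a, b, rel_tol=rel_tol)


if __name__ == "__main__":
    vals = [0.1] * 10
    a, b = mean_naive(vals), mean_independent(vals)
    print(a == b, agrees_within(a, b, rel_tol=1e-9))
```

Comparing against a stated tolerance, rather than requiring exact equality, fits the replicability and reproducibility definitions above, where the artifacts are not the author's own.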

The paper then offers a simpler, three-part terminology:

Methods reproducibility: provide sufficient detail about procedures and data so that the same procedures could be exactly repeated.

Results reproducibility: obtain the same results from an independent study with procedures as closely matched to the original study as possible.

Inferential reproducibility: draw the same conclusions from either an independent replication of a study or a reanalysis of the original study.

Abernathey et al. (2021) propose three pillars of cloud-native science:

Three Pillars of Cloud Native Science

- Analysis-Ready Data (ARD)

Also called Analysis-Ready, Cloud-Optimized (ARCO) data, stored in formats such as Cloud Optimized GeoTIFF (COG).

- Data-proximate Computing

Also called server-side computing: computations run remotely, close to where the data are stored, instead of on a downloaded copy of the data.

- Scalable Distributed Computing

The ability to adjust the amount of computing resources (for example, the number of workers or nodes) used by a computational process.
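The analysis-ready data and scalable computing pillars complement each other. When data are stored in independent chunks, the same per-chunk computation can be spread over more or fewer workers. The following sketch is an illustrative map-reduce, not the method of Abernathey et al. The chunking helper and `distributed_mean` are hypothetical. For simplicity, the sketch uses the standard library's `ThreadPoolExecutor`. Because of CPython's global interpreter lock, CPU-bound work like this would in practice use processes or a cluster framework such as Dask.

```python
from concurrent.futures import ThreadPoolExecutor


def make_chunks(values, chunk_size):
    """Split a 1-D dataset into fixed-size chunks, loosely mimicking how
    chunked cloud-optimized formats store data in independent pieces."""
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def chunk_stats(chunk):
    """Per-chunk partial result (sum, count); needs no other chunk."""
    return sum(chunk), len(chunk)


def distributed_mean(values, chunk_size=1000, n_workers=4):
    """Map chunk_stats over the chunks with n_workers, then reduce the partials.

    n_workers changes the resources used, not the algorithm.
    """
    chunks = make_chunks(values, chunk_size)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map returns results in chunk order, regardless of which worker ran each one
        partials = list(pool.map(chunk_stats, chunks))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


if __name__ == "__main__":
    data = list(range(10_000))
    for workers in (1, 4, 8):
        print(workers, distributed_mean(data, n_workers=workers))
```

The partial results are combined in a fixed order, so the answer is the same whether `n_workers` is 1 or 8. Scaling the workers changes only how quickly the work finishes.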